Autonomous Racing

1

f1tenth Project Group of Technical University Dortmund, Germany

Public Member Functions | |

| def | __init__ (self) |

| def | replay (self) |

| def | get_epsilon_greedy_threshold (self) |

| def | select_action (self, state) |

| def | get_reward (self) |

| def | get_episode_summary (self) |

| def | on_complete_step (self, state, action, reward, next_state) |

Public Member Functions inherited from training_node.TrainingNode | |

| def | __init__ (self, policy, actions, laser_sample_count, max_episode_length, learn_rate) |

| def | on_crash (self, _) |

| def | get_episode_summary (self) |

| def | on_complete_episode (self) |

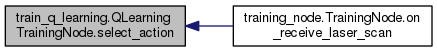

| def | on_receive_laser_scan (self, message) |

| def | on_complete_step (self, state, action, reward, next_state) |

| def | check_car_orientation (self) |

| def | on_model_state_callback (self, message) |

Public Member Functions inherited from reinforcement_learning_node.ReinforcementLearningNode | |

| def | __init__ (self, actions, laser_sample_count) |

| def | perform_action (self, action_index) |

| def | convert_laser_message_to_tensor (self, message, use_device=True) |

| def | on_receive_laser_scan (self, message) |

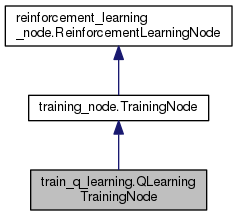

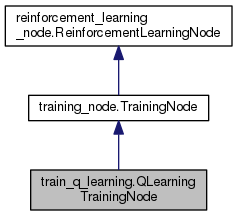

ROS node to train the Q-Learning model

Definition at line 17 of file train_q_learning.py.

| def train_q_learning.QLearningTrainingNode.__init__ | ( | self | ) |

Definition at line 21 of file train_q_learning.py.

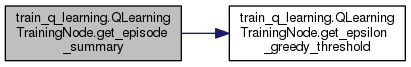

| def train_q_learning.QLearningTrainingNode.get_episode_summary | ( | self | ) |

Definition at line 93 of file train_q_learning.py.

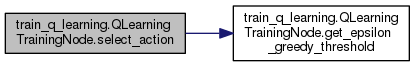

| def train_q_learning.QLearningTrainingNode.get_epsilon_greedy_threshold | ( | self | ) |

| def train_q_learning.QLearningTrainingNode.get_reward | ( | self | ) |

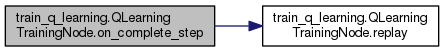

| def train_q_learning.QLearningTrainingNode.on_complete_step | ( | self, state, action, reward, next_state | ) |

Definition at line 100 of file train_q_learning.py.

| def train_q_learning.QLearningTrainingNode.replay | ( | self | ) |

| def train_q_learning.QLearningTrainingNode.select_action | ( | self, state | ) |

Definition at line 70 of file train_q_learning.py.
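
This page does not show the bodies of `get_epsilon_greedy_threshold` and `select_action`. As a rough illustration of how epsilon-greedy selection is commonly written, here is a minimal sketch. The exponential decay schedule, the constants `EPS_START`, `EPS_END` and `EPS_DECAY`, and the free-function signatures are all assumptions for illustration, not values or code taken from train_q_learning.py.

```python
import math
import random

# Hypothetical decay parameters; NOT taken from train_q_learning.py.
EPS_START = 1.0    # exploration probability at step 0
EPS_END = 0.05     # exploration probability after long training
EPS_DECAY = 10000  # decay time constant, in optimization steps


def epsilon_greedy_threshold(step_count):
    """Decay epsilon exponentially from EPS_START towards EPS_END."""
    return EPS_END + (EPS_START - EPS_END) * math.exp(-step_count / EPS_DECAY)


def select_action(q_values, step_count, rng=random):
    """Return a random action index with probability epsilon,
    otherwise the index of the largest Q-value."""
    if rng.random() < epsilon_greedy_threshold(step_count):
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])
```

In the documented class, the step counter would presumably correspond to `optimization_step_count`, and the Q-values would come from the policy network evaluated on `state`; both mappings are guesses based on the member names listed here.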

| train_q_learning.QLearningTrainingNode.memory |

Definition at line 30 of file train_q_learning.py.
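
The type of `memory` is not documented on this page. Given that `on_complete_step` receives `(state, action, reward, next_state)` and that a `replay` method exists, one plausible reading is an experience replay buffer. The sketch below shows that general technique under stated assumptions: `ReplayMemory`, `Transition`, `td_target` and the `gamma` default are hypothetical names and values, not the project's implementation.

```python
import random
from collections import deque, namedtuple

# Hypothetical record type mirroring the on_complete_step arguments.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayMemory:
    """Fixed-size buffer that discards the oldest transition when full."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.buffer.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size):
        # Uniform sampling without replacement within one batch.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_target(reward, next_q_values, gamma=0.99):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a').
    Pass next_q_values=None for a terminal transition (e.g. a crash)."""
    if next_q_values is None:
        return reward
    return reward + gamma * max(next_q_values)
```

A training step in this style would push each completed transition, sample a batch once enough transitions are stored, and regress the network's Q-value for the taken action towards `td_target`.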

| train_q_learning.QLearningTrainingNode.net_output_debug_string |

Definition at line 78 of file train_q_learning.py.

| train_q_learning.QLearningTrainingNode.optimization_step_count |

Definition at line 31 of file train_q_learning.py.